Digital business transformation examples: 10 Real-World Case Studies

Feb 10, 2026 in Listicle: Examples

Discover digital business transformation examples and how AI, data, and strategy fuel growth with practical, actionable insights.

Learn how to automatically tag text using Large Language Models, and what the trade-offs are between different methods!

Paulo Maia on Aug 29, 2023

Text classification is one of the most common use cases in Natural Language Processing, with numerous practical applications – now easier to access with Large Language Models. Companies use text classification in multiple scenarios to become more efficient:

All of these use cases were solvable in the past without LLMs. However, the rise of these models has reduced the amount of training data needed to obtain good results and has raised the average performance on these use cases, while taking less time to get there.

In this blog post, we will cover several text classification techniques from before and after the rise of the most recent LLMs (OpenAI, LLaMA, Bing, …).

The most common techniques for text classification are:

“Let’s assume you’re an Encyclopedia, and you have to define the concepts I’m providing. Your explanation must be succinct (couple of paragraphs), like the summary section of a Wikipedia article talking about the concept. (…)”

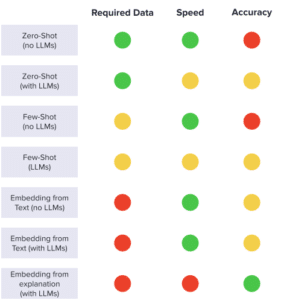
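Prompt-based classification follows the same pattern as the quoted prompt: the instructions are written once as a template, the input is filled in, and the model's free-text reply is mapped back onto a known label. The following is a minimal sketch of that pattern, not the post's own implementation. The function names, the prompt wording, and the `call_llm` parameter are all hypothetical; `fake_llm` is a stand-in so the example runs without any API.

```python
from typing import Callable, Sequence


def build_zero_shot_prompt(text: str, labels: Sequence[str]) -> str:
    """Build a prompt asking the model to pick exactly one label.

    The wording is illustrative, not taken from the post.
    """
    label_list = "\n".join(f"- {label}" for label in labels)
    return (
        "Classify the text into exactly one of the following categories.\n"
        f"{label_list}\n\n"
        f"Text: {text}\n"
        "Answer with the category name only."
    )


def parse_label(response: str, labels: Sequence[str], default: str = "unknown") -> str:
    """Map a free-form model reply back onto one of the allowed labels."""
    cleaned = response.strip().lower()
    # Check longer labels first so "not spam" is not mistaken for "spam".
    for label in sorted(labels, key=len, reverse=True):
        if label.lower() in cleaned:
            return label
    return default


def classify(text: str, labels: Sequence[str], call_llm: Callable[[str], str]) -> str:
    """Classify `text` using any function that sends a prompt to an LLM."""
    prompt = build_zero_shot_prompt(text, labels)
    return parse_label(call_llm(prompt), labels)


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real model call; always gives the same reply."""
    return "Category: Billing."


if __name__ == "__main__":
    print(classify("I was charged twice this month.", ["Billing", "Shipping"], fake_llm))
```

Keeping the reply parser separate from the prompt builder means a verbose or slightly off-format reply can still be resolved to a valid label, and anything unrecognized falls back to a default instead of leaking into downstream code.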

Below is a comparative chart, summarizing the trade-offs of the methods in terms of required data, speed and accuracy.

We showed you several ways of doing text classification using Large Language Models. LLMs let you reach acceptable performance within a few hours of work and make a good initial benchmark. Even so, don’t forget about older methods, which can serve as a fallback when you need faster results or when paying for LLM requests is not feasible at the scale of your use case.
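As one example of the kind of older method that can serve as a fallback, here is a sketch of a multinomial naive Bayes classifier over word counts, written with only the Python standard library. The choice of naive Bayes, the class name, and the toy training data are assumptions made for illustration; they are not taken from the post.

```python
import math
from collections import Counter, defaultdict


def tokenize(text: str) -> list[str]:
    """Lowercase and split on whitespace; real pipelines would do more."""
    return text.lower().split()


class NaiveBayesClassifier:
    """Multinomial naive Bayes with add-one (Laplace) smoothing."""

    def __init__(self) -> None:
        self.word_counts: dict[str, Counter] = defaultdict(Counter)
        self.doc_counts: Counter = Counter()
        self.vocab: set[str] = set()

    def fit(self, texts: list[str], labels: list[str]) -> "NaiveBayesClassifier":
        for text, label in zip(texts, labels):
            tokens = tokenize(text)
            self.word_counts[label].update(tokens)
            self.doc_counts[label] += 1
            self.vocab.update(tokens)
        return self

    def predict(self, text: str) -> str:
        total_docs = sum(self.doc_counts.values())
        tokens = tokenize(text)
        best_label, best_score = "", -math.inf
        for label, n_docs in self.doc_counts.items():
            # Log prior plus the sum of smoothed log likelihoods per token.
            score = math.log(n_docs / total_docs)
            total_words = sum(self.word_counts[label].values())
            for token in tokens:
                count = self.word_counts[label][token]
                score += math.log((count + 1) / (total_words + len(self.vocab)))
            if score > best_score:
                best_label, best_score = label, score
        return best_label


if __name__ == "__main__":
    # Toy data, invented for this example.
    texts = [
        "refund my payment",
        "charged twice on invoice",
        "package arrived late",
        "where is my delivery",
    ]
    labels = ["billing", "billing", "shipping", "shipping"]
    model = NaiveBayesClassifier().fit(texts, labels)
    print(model.predict("invoice payment problem"))
```

A model like this needs labeled training data, unlike a prompted LLM, but once trained it predicts locally and has no per-request cost, which is the trade-off the paragraph above describes.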
