

A state-of-the-art review and a practical use case in epilepsy detection

Pedro Dias on Nov 24, 2020

Transparency is of utmost importance when AI is applied to high-stakes decision problems, where additional information on the underlying process, beyond the output of the model, may be required. Taking the automation of loan attribution as an example, a client who has a loan denied will surely want to know why that happened and how it could have been avoided. In this blog post, we give you some examples of algorithms for Explainable AI, with a focus on Healthcare. This is the first part of our special “AI in Healthcare” month, where we give special focus to state-of-the-art applications of Artificial Intelligence in Health.

This kind of insight may not be as easy to obtain as one would think. The main issue with deep learning models is that they lack an explicit representation of knowledge, even when the technical principles of the models are understood; hence the rising interest in explainable AI.

Furthermore, official regulations such as the General Data Protection Regulation (GDPR) were created in the EU to ensure that the spread of automation techniques, such as deep neural networks, happens in a trustworthy manner, hampering their careless use in business. This does not mean that these approaches have to explain every aspect at every moment; instead, the results should be traceable on demand, as explained here.


Despite the lack of an agreed definition of the term within the field, the main idea is that there are two different types of understanding. Understandability and interpretability relate to the functional understanding of a certain model, providing the expert user with insights into the “black box” model. Explainability, on the other hand, relates to providing the average user with high-level algorithmic information that allows them to answer questions like “Why?”, and is not directly related to the “How?”.

Scheme of a truly explainable AI model extracted from here.

An interesting notion was introduced by Doran et al., who argue that a truly explainable model not only provides a decision and an explanation, but is also able to integrate reasoning. In the figure, the model should be able to classify the provided image as a “factory” because it contains certain elements, and afterwards provide reasoning supporting the decision: the association between the elements and the label should be made in an organized way, and not after classification. From this example one can easily see that in this ideal model both the “how?” and the “why?” are presented.

Explainable models can be divided into post-hoc and ante-hoc (or in-model) techniques. The former are applied to an already trained model, fitting explanations to it (e.g. saliency maps), whereas the latter are intrinsic to the model (e.g. decision trees), as explained in the work of Holzinger et al.

The table contains some examples of techniques that are currently being used. For simplicity, only visual data will be considered and one example of each group will be detailed, so that the reader gets a clearer picture of what can be achieved. Much more has been done than what is discussed here; for example, Singh et al. reviewed the methods currently being used to enhance the transparency of deep learning models applied to medical image analysis.

| Post-hoc | Ante-hoc |
|---|---|
| Activation Maximization | Attention-based models |
| Layer-wise propagation | Part-based models |

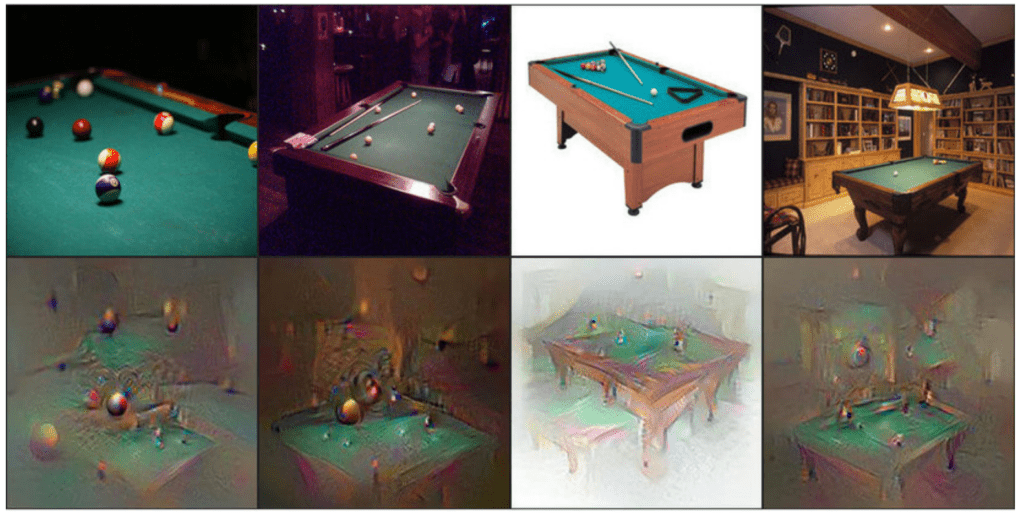

Activation Maximization visualizes the preferred inputs of certain neurons in each layer. This is done by finding the input pattern that leads to the maximum activation of a given neuron (each input pixel is changed until the maximum is achieved). The process is iterative and starts from a random input image that is then updated. The gradients of the activation with respect to the input can be computed using back-propagation, while the parameters learnt by the convolutional neural network are kept constant. In this way, each pixel of the initial noisy image is iteratively changed to maximise the activation of the considered neuron until the preferred pattern image is reached. As we can see in the image, obtained from the work of Nguyen et al., the image on the right corresponds to an abstract representation of pool tables that achieves the highest activation within the network. Reyes et al. concentrated their efforts on the current state of the art regarding explainable AI and radiology. In their work they showcase how techniques like this one, and others, can be employed.
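The iterative procedure described above can be sketched in a few lines. This is only a toy illustration, not the setup used by Nguyen et al.: the "network" is a single hypothetical tanh neuron over four pixels, and `WEIGHTS`, `BIAS`, the step size and the number of steps are all assumed values. The point is that the weights stay frozen while the input is updated by gradient ascent.

```python
import math
import random

# Hypothetical frozen "network": a single tanh neuron over a 4-pixel input.
WEIGHTS = [0.8, -0.5, 0.3, -0.9]
BIAS = 0.1


def activation(pixels):
    """Activation of the neuron; the weights are never modified."""
    z = sum(w * p for w, p in zip(WEIGHTS, pixels)) + BIAS
    return math.tanh(z)


def input_gradient(pixels):
    """Gradient of the activation with respect to each input pixel."""
    a = activation(pixels)
    return [(1.0 - a * a) * w for w in WEIGHTS]


def maximize_activation(steps=200, lr=0.1, seed=0):
    rng = random.Random(seed)
    pixels = [rng.uniform(0.0, 1.0) for _ in WEIGHTS]  # random noisy start
    start = activation(pixels)
    for _ in range(steps):
        grad = input_gradient(pixels)
        # Gradient ascent on the input, clipped to the pixel range [0, 1].
        pixels = [min(1.0, max(0.0, p + lr * g)) for p, g in zip(pixels, grad)]
    return pixels, start, activation(pixels)


preferred, start_act, final_act = maximize_activation()
```

In this toy case the preferred input simply switches on the pixels with positive weights and switches off the others. With a real convolutional network the same loop would run over an image tensor, usually with some regularisation to keep the result recognisable.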

Layer-wise Relevance Propagation (LRP) uses heatmaps to represent the contribution of each pixel to the output, for models such as kernel-based classifiers and multilayer neural networks. This decomposition is rooted in a series of constraints that guarantee the heatmap is realistic and consistent. Formally, the output is represented as the sum of the relevances of all pixels in the input layer. These relevances are computed in a chain-like mechanism in which the total relevance in one layer equals the total relevance in the next layer. This constrains the decomposition so that the total relevance remains constant from the output layer to the input layer, meaning that no relevance is lost or generated.

For more examples and detail on this technique, see the work of Bach et al. from which this image was picked. This technique was recently applied by Karim et al. on convolutional neural networks applied to X-ray images of lungs for COVID-19 detection.
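To make the conservation constraint of LRP concrete, here is a minimal sketch on a tiny bias-free ReLU network. The weights, the input and the helper names (`redistribute`, `lrp`) are assumptions made for illustration, and the redistribution rule is the basic proportional rule; Bach et al. describe stabilised variants that are not reproduced here.

```python
def forward(x, w1, w2):
    """w1[i][j]: input i -> hidden j; w2[j]: hidden j -> single output."""
    z1 = [sum(x[i] * w1[i][j] for i in range(len(x))) for j in range(len(w2))]
    h = [max(0.0, z) for z in z1]  # ReLU
    out = sum(h[j] * w2[j] for j in range(len(w2)))
    return h, out


def redistribute(acts, weights, relevance, eps=1e-12):
    """Split one unit's relevance over its inputs, proportionally to a_i * w_i."""
    contribs = [a * w for a, w in zip(acts, weights)]
    total = sum(contribs)
    if abs(total) < eps:  # nothing to redistribute from an inactive unit
        return [0.0] * len(acts)
    return [c / total * relevance for c in contribs]


def lrp(x, w1, w2):
    h, out = forward(x, w1, w2)
    r_hidden = redistribute(h, w2, out)  # output layer -> hidden layer
    r_input = [0.0] * len(x)
    for j, r_j in enumerate(r_hidden):   # hidden layer -> input layer
        column = [w1[i][j] for i in range(len(x))]
        for i, r in enumerate(redistribute(x, column, r_j)):
            r_input[i] += r
    return out, r_hidden, r_input


# Hypothetical 3-pixel input and weights, chosen so both hidden units are active.
x = [1.0, 0.5, 2.0]
w1 = [[0.5, -1.0], [1.0, 0.5], [-0.25, 0.75]]
w2 = [2.0, -1.0]
out, r_hidden, r_input = lrp(x, w1, w2)
```

Summing `r_hidden` or `r_input` gives back `out` (up to floating-point error), which is the "no relevance is lost or generated" property. The per-pixel values in `r_input` are what a heatmap would display.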

Attention models are methods inspired by the human visual system and its focal perception and processing of objects. They are not exclusive to computer vision problems, having applications in Natural Language Processing, Statistical Learning and Speech as well (see Chaudhari et al. for more on attention models and examples of them). Attention models provide a way to enhance neural network interpretability while, in some cases, reducing the computational cost by selecting certain parts of the input.
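As a rough illustration of why attention weights are interpretable, the sketch below implements a generic soft attention step: each input part gets a score, a softmax turns the scores into weights, and the output is the weighted sum of the parts. The dot-product scoring, the `query` vector and the example values are assumptions; they are not taken from any specific model in the survey by Chaudhari et al.

```python
import math


def softmax(scores):
    m = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(parts, query):
    """parts: feature vectors of input regions; query: vector scoring relevance."""
    scores = [sum(p_k * q_k for p_k, q_k in zip(part, query)) for part in parts]
    weights = softmax(scores)
    dim = len(parts[0])
    context = [sum(w * part[k] for w, part in zip(weights, parts)) for k in range(dim)]
    return weights, context


# Three hypothetical image regions described by 2-dimensional features.
parts = [[1.0, 0.0], [0.0, 1.0], [3.0, 0.0]]
query = [1.0, 0.0]
weights, context = attend(parts, query)
most_attended = max(range(len(parts)), key=lambda i: weights[i])
```

The weights sum to one, so they can be read directly as "how much each region mattered". Keeping only the highest-weighted regions is one way such a model can reduce computation.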

Nowadays, there are already approaches that take advantage of this idea. For example, Rio-Torto et al. proposed a network that contains both a Classifier and an Explainer. The Explainer gives higher weight to the parts of the input that are relevant for the classification, as can be seen in the image below (extracted from their work), where the zebra stripes are highlighted.

Part-based networks are a recent kind of architecture inspired by the way humans explain an image in a classification task: by pointing to parts that are similar to what we have previously seen throughout our experience.

The Prototypical part network (ProtoPNet), proposed by Chen et al., is an ante-hoc technique capable of producing explanations alongside its predictions. The explanations are in fact a by-product of the activation of the prototypes on the network’s input. These prototypes are latent representations of some input part from a certain class, and the decision is based on a weighted combination of their similarity scores, which dictates the class to which the image belongs. In the original classification task, and following the scheme below, one can see that in the prototype layer each of the prototypes comes from a part of a bird: the first one is the head of a clay coloured sparrow, whereas the second one is the head of a Brewer’s sparrow. This means that the network learned, for example, that the head of a clay coloured sparrow is a distinctive pattern of its specific class, and that if the input image is similar to the pattern within the prototype, this will positively contribute to the classification of the image.
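The scoring step described above can be sketched as follows. The similarity uses the log-ratio activation from Chen et al., taken over the closest latent patch; everything else (the 2-dimensional latent patches, the two prototypes, the class weights and the function names) is a hypothetical toy setup rather than the published architecture.

```python
import math


def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def prototype_score(patches, prototype, eps=1e-4):
    """Similarity between a prototype and its closest latent patch."""
    d = min(squared_distance(patch, prototype) for patch in patches)
    return math.log((d + 1.0) / (d + eps))  # large when d is small


def classify(patches, prototypes, class_weights):
    scores = [prototype_score(patches, proto) for proto in prototypes]
    # Each class logit is a weighted combination of the prototype scores.
    logits = [sum(w * s for w, s in zip(ws, scores)) for ws in class_weights]
    return scores, logits


# Hypothetical latent patches of one input image.
patches = [[0.9, 0.1], [0.5, 0.5]]
# Hypothetical prototypes: index 0 stands for a "clay coloured sparrow head",
# index 1 for a "Brewer's sparrow head".
prototypes = [[1.0, 0.0], [0.0, 1.0]]
# Assumed weights: a class is supported by its own prototype, penalised by the other.
class_weights = [[1.0, -0.5], [-0.5, 1.0]]

scores, logits = classify(patches, prototypes, class_weights)
predicted_class = max(range(len(logits)), key=lambda c: logits[c])
```

Because one patch lies close to the first prototype, its score dominates and class 0 is predicted. The explanation is simply which prototype fired and on which patch.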

During my MSc Dissertation, I worked with the Clinical Neurophysiology Group, from the University of Twente, on the automation of the explainable diagnosis of epilepsy by deep learning models. It is also worth mentioning Prof. Luís Teixeira who gave crucial guidance during my work.

Epilepsy is a neurological disorder that affects more than 50 million people worldwide. Its diagnosis is based on electroencephalography (EEG), the recording of brain electrical activity. Current clinical practice includes the analysis of EEG recordings by trained neurologists to identify abnormal patterns associated with seizures, characterized by high frequency abnormalities in the EEG signals. However, this visual analysis, apart from being subjective, is extremely laborious, as it requires highly trained neurophysiologists to go through EEG signals that may span hours of recording.

A major hindrance to the application of deep learning models in healthcare is that they are often seen as “black boxes”, despite their high performance. Consequently, they provide little to no insight into the processes underlying the decision, which is not acceptable in a medical context. To tackle this, two explainable approaches were used for seizure detection, in which the models identified not only which portions of the signals were most likely to contain abnormal patterns but also which regions contributed the most to this decision (visual explanations).

The approaches used were the two described previously: the Classifier and Explainer network (C&E) and the Prototypical part network (ProtoPNet). Both approaches were evaluated with respect to the classification task and the explanations provided, which were directly compared to those of experts. Below we can see the EEG signals from a multi-channel montage and the overlapped explanations, where the high spike regions are the most highlighted. The similarity with the explanations provided by the experts tells us that both networks were indeed able to provide relevant insights by correctly suggesting seizure-related patterns associated with the decision of the model.

With the diffusion of machine learning models throughout different businesses and fields, the number of works on explainable AI in the literature is also increasing. High-stakes decisions are the main focus of these models, as they often require more than a decision, or prediction, to be accepted and employed safely in the desired environment, as in this example with epilepsy. However, these approaches can also be used in several other AI domains, to help understand what is really driving your decisions. If you have some reservations about applying AI to your business, feel free to discuss with us this kind of more transparent approach and how it could help you!


Ras, Gabriëlle, Marcel van Gerven, and Pim Haselager. “Explanation methods in deep learning: Users, values, concerns and challenges.” Explainable and Interpretable Models in Computer Vision and Machine Learning. Springer, Cham, 2018. 19-36.

Doran, Derek, Sarah Schulz, and Tarek R. Besold. “What does explainable AI really mean? A new conceptualization of perspectives.” arXiv preprint arXiv:1710.00794 (2017).

Holzinger, Andreas, et al. “What do we need to build explainable AI systems for the medical domain?.” arXiv preprint arXiv:1712.09923 (2017).

Singh, Amitojdeep, Sourya Sengupta, and Vasudevan Lakshminarayanan. “Explainable deep learning models in medical image analysis.” arXiv preprint arXiv:2005.13799 (2020).

Nguyen, Anh, Jason Yosinski, and Jeff Clune. “Multifaceted feature visualization: Uncovering the different types of features learned by each neuron in deep neural networks.” arXiv preprint arXiv:1602.03616 (2016).

Reyes, Mauricio, et al. “On the Interpretability of Artificial Intelligence in Radiology: Challenges and Opportunities.” Radiology: Artificial Intelligence 2.3 (2020): e190043.

Bach, Sebastian, et al. “On pixel-wise explanations for non-linear classifier decisions by layer-wise relevance propagation.” PloS one 10.7 (2015): e0130140.

Karim, Md, et al. “Deepcovidexplainer: Explainable covid-19 predictions based on chest x-ray images.” arXiv preprint arXiv:2004.04582 (2020).

Chaudhari, Sneha, et al. “An attentive survey of attention models.” arXiv preprint arXiv:1904.02874 (2019).

Rio-Torto, Isabel, Kelwin Fernandes, and Luis F. Teixeira. “Understanding the decisions of CNNs: an in-model approach.” Pattern Recognition Letters (2020).

Chen, Chaofan, et al. “This looks like that: deep learning for interpretable image recognition.” Advances in neural information processing systems. 2019.
